Battle of the HTTP Protocols!

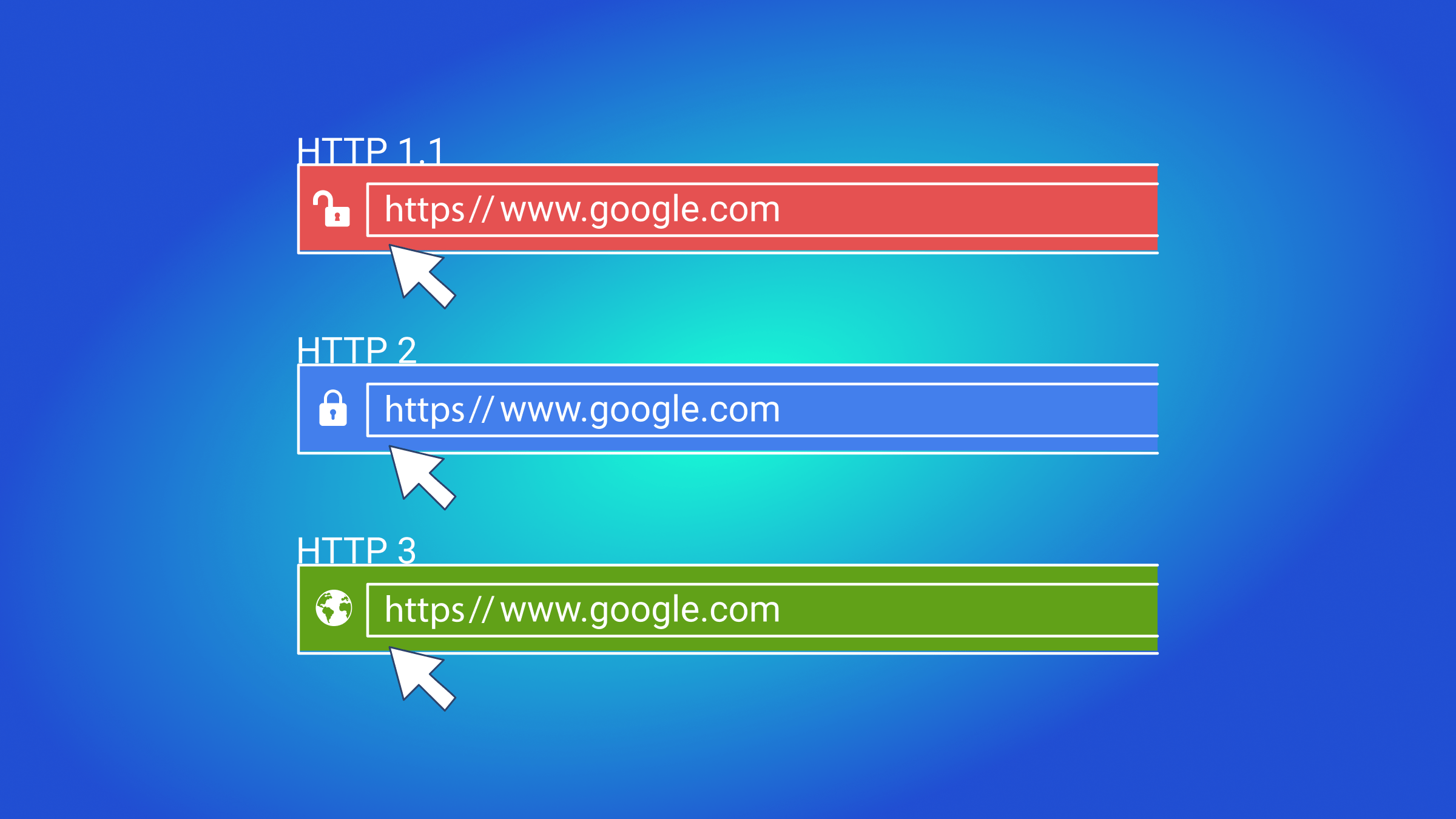

This article takes us through the “Battle of the Protocols”, contrasting the diverse versions of HTTP, to learn their strengths and limitations. It examines the development of web protocols and their influence on the contemporary internet user experience, starting with the fundamental ideas of HTTP/1.1 and moving on to the ground-breaking inventions of HTTP/2 and HTTP/3.

Discover how at OpenReplay.com.

The development of web protocols has greatly influenced the digital environment we live in today. The most notable developments are HTTP/1.1, HTTP/2, and HTTP/3, which each mark a turning point in the effort to achieve faster, more effective, and more secure web communication.

Before dissecting the different HTTP protocols, let’s provide answers to some of the fundamental and frequently asked questions about HTTP protocol.

Here are some frequently asked questions about the HTTP protocol:

What is a Protocol?

A protocol is a collection of guidelines that control the flow of data back and forth between systems or devices. It makes it possible to have efficient communication across networks by defining the format, organization, and flow of messages that are exchanged.

The purpose and intricacy of protocols differ, ranging from basic email protocols to intricate web browsing protocols. In the end, protocols serve as the cornerstone of contemporary digital communication, enabling smooth online engagement.

What is HTTP?

Hypertext Transfer Protocol is referred to as HTTP. It is an application layer protocol that is used to send hypermedia documents across the Internet, such as HTML files.

How does HTTP work?

HTTP works on a client-server architecture, in which web browsers and other clients send resource requests to servers, who then provide the desired data. The stateless exchange of requests and answers makes each transaction independent of earlier exchanges.

What are the different methods in HTTP?

The action to be taken on a resource is specified by one of HTTP’s several verbs or methods. GET (retrieve data), POST (submit data), PUT (update data), DELETE (delete data), and other methods are frequently used.

What is the difference between HTTP and HTTPS?

A secure variant of HTTP, known as HTTPS (Hypertext Transfer Protocol Secure), encrypts data while it is transferred between clients and servers. HTTPS is frequently utilized for secure communication, including online banking and e-commerce.

What is the role of headers in HTTP?

Additional data transmitted with HTTP requests and responses are known as HTTP headers. Headers include information about the request or response’s metadata, including the type of content, encoding, cookies, and caching instructions.

What is the significance of HTTP status codes?

The success or failure of an HTTP request is indicated by HTTP status codes. Typical status codes that indicate the result of the request are 200 (OK), 404 (Not Found), 500 (Internal Server Error), and others.

How does HTTP caching work?

Clients can locally store copies of web resources to lower server load and latency thanks to HTTP caching. The time a resource can be cached and the circumstances under which it needs to be revalidated with the server are indicated by caching directives in HTTP headers.

HTTP/1: The Foundation

Operating over Transmission Control Protocol (TCP), HTTP/1 was a major milestone in the development of web protocols, published in 1996. Because it relied on distinct TCP connections for every request, it was inefficient and resulted in higher latency and worse performance, particularly as web pages became more complicated.

The restricted number of concurrent connections per server led to scalability problems. Afterward, HTTP was redesigned with the introduction of HTTP/1.1 to circumvent these restrictions.

Remark: The comparison does not include HTTP/1 because it was an older version with built-in constraints, mostly related to resource consumption, scalability, and performance, which were greatly improved upon and addressed in HTTP/1.1, HTTP/2, and HTTP/3.

HTTP/1.1: The Evolution

In 1997, HTTP/1.1 quickly followed. By eliminating the need to create new TCP connections for every request, it introduced the “keep alive” feature, which reduces latency by enabling connections to be reused for many requests. But another aspect of HTTP/1.1, HTTP pipelining, caused problems with head-of-line blocking.

Although pipelining potentially speeds up communication by enabling many requests to be sent without waiting for each response, performance is decreased overall when there is a delay or obstruction in one request because it impacts subsequent requests on the same connection. Modern web browsers use numerous simultaneous TCP connections to the same server to lessen the impact of delays on individual requests. Thanks to this, requests can now be sent concurrently. This method improves web page loading speed by striking a compromise between the necessity for parallelism and the complexity of handling multiple connections.

Features of HTTP/1.1

With the introduction of permanent connections in HTTP/1.1, overhead was reduced by enabling the transmission of numerous requests and responses over a single connection.

The Host header also permits virtual hosting, which allows several websites to be served on a single server.

Chunked transfer encoding facilitates data transfer in segments, which is advantageous for streaming or transmitting big files with erratic durations.

Furthermore, HTTP/1.1 improved cache management and content negotiation techniques, providing more precise control over content selection and caching strategies and enhancing performance and dependability for contemporary web applications.

Strengths of HTTP/1.1

Significant improvements were introduced with HTTP/1.1, such as Chunked transfer encoding for flexible data transport, the Host header for virtual hosting, and persistent connections for lower overhead. Its main strengths are backward compatibility, permanent connections that lower latency, and broad support from web servers, clients, and proxies.

Additionally, improved content negotiation features let servers provide customized content according to client preferences, maximizing resource delivery and enhancing network performance and user experience.

Limitations of HTTP/1.1

Because HTTP/1.1 lacks internal authentication and encryption, it is vulnerable to head-of-line blocking and security weaknesses that allow for message modification and eavesdropping.

Also, because HTTP/1.1 is sequential, it still has considerable latency even with persistent connections, which makes resource retrieval inefficient.

Finally, HTTP/1.1’s limited multiplexing capabilities result in less efficient resource use, particularly when several requests are sent at once.

HTTP/2: The Transition

When HTTP/2 was released in 2015, it addressed the shortcomings of HTTP/1.1 and added new functionality, which made it a major improvement over HTTP/1.1. It improved network resource use by introducing HTTP streams, allowing numerous requests and responses multiplexed over a single TCP connection. Because HTTP/2 streams are independent, as opposed to HTTP/1.1 pipelining, head-of-line blocking is eliminated, improving performance and lowering latency.

Furthermore, HTTP/2 added server push functionality, enabling servers to forward resources to clients in advance, improving performance and reducing page load times. In general, HTTP/2 prioritizes performance, reduced latency, and improved user experience, making it a more reliable and efficient protocol for contemporary web applications.

HTTP/2 brought significant improvements over HTTP/1.1 by addressing its limitations and introducing new features.

Features of HTTP/2

Multiplexing for effective resource use, header compression for overhead reduction using HPACK, server push for proactive resource delivery, and stream prioritization for better resource allocation and enhanced user experience are all introduced by HTTP/2.

Strengths of HTTP/2

It adds multiplexing, which lowers latency and boosts efficiency by enabling several requests and responses to be transmitted concurrently over a single connection.

By compressing HTTP headers, HTTP/2 minimizes overhead and allows for faster data transfer and less bandwidth consumption.

Because the protocol supports server push, servers can proactively provide resources to clients before they are requested, improving user experience and speeding up page loads.

HTTP/2 enhances performance by prioritizing requests, enabling the delivery of essential resources first, resulting in faster page rendering.

It improves security by requiring the use of HTTPS, guaranteeing encrypted data transmission, and reducing security concerns related to plaintext communication.

Limitations of HTTP/2

Head-of-line blocking still happens sometimes, causing page loads to lag when one resource on the same connection takes longer than others.

Although header compression lowers overhead, its compression and decompression processes demand more processing power, which could affect server performance.

Because HTTP/2 relies on HTTPS for security, websites that do not support HTTPS cannot take advantage of HTTP/2’s security features, which could leave them open to assaults.

Some servers and clients may find it difficult to adopt HTTP/2 due to its complexity. This could cause compatibility problems and increase the resources needed for deployment and upkeep.

Even with these enhancements, HTTP/2 might not be able to completely solve all performance problems, especially in situations where there is a lot of delay or little network capacity.

HTTP/3: The Revolution

After debuting as a draft in 2020, HTTP/3 is a significant advancement in web protocols since it replaces the conventional TCP transport protocol with QUIC (Quick UDP Internet Connections).

By allowing many streams to share a connection without requiring extra handshakes, QUIC emphasizes stream multiplexing and maximizes resource use. Most importantly, it ensures independent stream delivery even in the event of packet loss or congestion, eliminating head-of-line blocking at the transport layer, improving performance, and lowering latency.

Moreover, QUIC’s usage of Connection ID facilitates smooth switching between IP addresses and network interfaces, which is very helpful for users on the go. HTTP/3, driven by QUIC, is gaining momentum despite its recent inception because it prioritizes performance, dependability, and adaptability to meet the demands of the current internet.

Features of HTTP/3

The QUIC protocol—which underpins HTTP/3—offers more effective multiplexing and reduced latency by treating streams as first-class citizens, improving reliability with integrated error correction and congestion control, and facilitating faster connection establishment without the need for the traditional TCP handshake.

Strengths of HTTP/3

It uses the QUIC transport protocol, which improves security and performance by providing features like multiplexing and connection migration, built-in encryption, and decreased latency.

By leveraging QUIC’s multiplexing features, HTTP/3 addresses HTTP/2’s head-of-line blocking problem by enabling the processing of many requests and responses independently, which lowers latency and boosts efficiency.

By facilitating the smooth transition of connections between various network interfaces or IP addresses, QUIC’s connection migration function improves dependability and resistance to network changes.

The protocol prioritizes stream multiplexing and prioritization to improve user experience and page loading speed, guaranteeing the timely delivery of essential resources.

Because HTTP/3 uses User Datagram Protocol (UDP) rather than TCP, data transfer is faster and more dependable. This lessens the effects of congestion and packet loss and speeds up connection setup times.

Limitations of HTTP/3

Adoption and compatibility may be limited by dependence on UDP in networks or contexts where UDP traffic is banned or given lower priority than TCP.

Although QUIC’s encryption improves security, it can also result in an increased computational burden for encryption and decryption, which could hurt performance, particularly for devices with limited resources.

Due to their complexity, some servers and clients may find it difficult to install and maintain HTTP/3 and QUIC. This could cause compatibility problems and necessitate the use of more resources for deployment and upkeep.

Furthermore, because HTTP/3 is still relatively new, there could not be as much support or compatibility with current tools, protocols, and infrastructure. This could prevent widespread adoption until HTTP/3 matures and becomes more standardized.

Side-by-side comparison of the Protocols

Here’s a side-by-side comparison of HTTP/1.1, HTTP/2, and HTTP/3 based on their features, strengths, and limitations:

| Feature | HTTP/ 1.1 | HTTP/2 | HTTP/3 |

|---|---|---|---|

| Features | |||

| Persistent Connections | Introduced, allows reusing connections | Yes, multiplexes requests over a single connection | Yes, multiplexes requests over a single connection |

| Chunked Transfer Encoding | Supported | Yes, allows data to be sent in multiple chunks | Yes, supports chunked transfer encoding |

| Host Header | Introduced | N/A (maintains compatibility) | N/A (maintains compatibility) |

| Multiplexing | Not supported | Yes, allows multiple requests to be sent in parallel over the same connection | Yes, using QUIC, with independent streams |

| Server Push | Not supported | Yes, allows servers to push resources proactively | Yes, allows servers to push resources proactively |

| Header Compression | Not supported | Yes reduces overhead with HPACK | N/A (built-in mechanisms for reliability) |

| Strengths | |||

| Backward Compatibility | Compatible with existing infrastructure | Maintains backward compatibility with HTTP/1.1 | Maintains compatibility with HTTP/1.1 |

| Improved Performance | Limited due to lack of multiplexing | Yes, multiplexing and header compression boost performance | Yes, reduced latency with QUIC, independent streams |

| Efficient Resource Utilization | Limited due to lack of multiplexing | Yes reduces the overhead of establishing multiple connections | Yes, improves resource utilization with independent streams |

| Reduced Latency | Limited due to lack of multiplexing | Yes, reduces latency with multiplexing and header compression | Yes, further reduces latency with QUIC |

| Limitations | |||

| Head-of-Line Blocking | Present, subsequent requests may be blocked | Present, though mitigated with multiplexing | Reduced with QUIC’s independent streams |

| Security Vulnerabilities | No built-in encryption or authentication | No built-in encryption or authentication | Requires TLS for security (HTTPS) |

| Complexity | Low | Increased complexity with multiplexing and header compression | Increased complexity with QUIC and UDP |

| Compatibility Issues | Compatibility with older infrastructure may be limited | Compatibility with older infrastructure may be limited | Compatibility with older infrastructure may be limited |

All things considered, HTTP/2 and HTTP/3 provide considerable gains over HTTP/1.1 regarding speed, efficiency, and lower latency; adding QUIC to HTTP/3 brings even more advantages. Each version, however, has advantages and disadvantages, and several variables, including performance objectives, compatibility requirements, and the particular requirements of the website or application influence the choice of protocol.

Real-world examples illustrating performance differences between Protocols

Numerous websites and web apps provide real-world examples of how HTTP/1.1, HTTP/2, and HTTP/3 function differently. Here are a few typical situations:

-

Website Loading Speed: Websites using HTTP/1.1 load resources sequentially via separate TCP connections, which increases delay, especially when transferring data over distant servers or unstable networks. In contrast, HTTP/2 dramatically lowers load times for complicated websites by using multiplexing to fetch numerous resources at once over a single TCP connection. With QUIC powering HTTP/3, latency is further reduced by eliminating head-of-line blocking and enhancing congestion control, which leads to quicker loading times, especially on cellular networks or in situations with heavy packet loss.

-

Streaming and Real-Time Communication: Because HTTP/2 and HTTP/3 offer server push and multiplexing, streaming services—like video and music platforms—can benefit from improved performance. This makes it possible to distribute media content more effectively, which leads to buffering reduction and smoother playback. Based on QUIC, HTTP/3 offers reduced latency, which is advantageous for real-time communication applications like chat rooms and multiplayer games. This improves the user experience by enabling faster response times and data delivery.

-

Mobile Web Browsing: Issues like excessive network latency and restricted bandwidth are common for mobile users. Header compression and connection multiplexing are two mobile-specific enhancements provided by HTTP/2 and HTTP/3 that can enhance performance and cut down on data consumption. Furthermore, HTTP/3’s usage of QUIC can be especially helpful for mobile users who regularly switch between networks (such as cellular and Wi-Fi), as it allows for more dependable transmission over erratic connections and faster connection creation.

-

E-commerce and Transactional Websites: For e-commerce websites and other transactional platforms to guarantee a seamless user experience, clients and servers need to communicate quickly and reliably. Optimizing the distribution of product pictures, payment processing, and other dynamic content using HTTP/2 and HTTP/3 can achieve faster checkout speeds and higher conversion rates.

Overall, users will likely have a better overall web browsing experience with HTTP/1.1, HTTP/2, and HTTP/3 because of the newer protocols’ substantial increases in speed, efficiency, and dependability, even though the performance differences between them may vary based on particular use cases and network conditions.

Examples of websites that use HTTP/1.1, 2 and 3

However, it can be difficult to pinpoint particular websites that only use HTTP/1, HTTP/2, or HTTP/3 because many websites support multiple protocols at once or switch between protocols depending on many factors like server capabilities, network conditions, and browser support. Nonetheless, the following websites are instances of those who have tried or implemented these protocols:

-

HTTP/1: It’s possible that a large number of older or less updated websites still primarily utilize HTTP/1, particularly if they haven’t been updated for newer protocols. Smaller blogs, individual webpages, or older services that still use HTTP/1 are not uncommon. While blogging services like WordPress, Medium, and Blogger continue to use HTTP/1.1 for their primary website delivery, news websites like CNN, BBC News, and The New York Times still utilize it for content delivery.

-

HTTP/2: To make use of HTTP/2’s multiplexing, header compression, and server push features, large-scale websites and platforms that place a high priority on performance and user experience frequently implement it. A few websites that use HTTP/2 are Google (including YouTube, Gmail, and Google Search), Facebook, X (Twitter), Amazon and Netflix.

-

HTTP/3: Based on the QUIC protocol, HTTP/3 is a relatively modern technology that may still be being adopted by large websites. Nonetheless, a few businesses and services have started testing HTTP/3 in live settings. Cloudflare, Google and Fastly are a few examples.

Protocol Recommendations for Website Development

The HTTP protocol to choose while creating a website or online application is determined by several criteria, including security concerns, compatibility issues, and performance requirements. Based on various scenarios, the following recommendations have been made:

-

Performance-Critical Applications: Use HTTP/2 or HTTP/3 for performance-critical applications where decreasing latency and speeding up page loads are crucial. Compared to HTTP 1.1, these protocols’ features—like multiplexing, header compression, and server push—can greatly improve performance.

-

Compatibility and Support: Since HTTP/1.1 is extensively supported and works with almost all web servers, clients, and proxies, it is still a good choice if compatibility with outdated infrastructure or browsers is an issue. However, remember that HTTP/2 and HTTP/3 are still compatible with HTTP/1.1, so switching to these more recent protocols should be very easy.

-

Security Requirements: If your website or application prioritizes security, you should think about utilizing HTTP/2 or HTTP/3 with TLS (HTTPS). HTTP/2 and HTTP/3 offer more security features and performance enhancements that can improve your site’s overall security posture, even though HTTP/1.1 can also be secured with HTTPS.

-

Mobile-Friendly Applications: QUIC-powered HTTP/3 is a competitive option for mobile-friendly applications where users may frequently transfer between different networks or have restricted bandwidth. It is well-suited for mobile environments due to its rapid connection setup, independent streams, and integrated dependability measures, which improve both performance and user experience.

-

Complexity and Resource Constraints: Think about how difficult it will be to manage and implement various protocols, particularly if you don’t have a lot of resources or experience. Even though HTTP/2 and HTTP/3 are significantly faster than HTTP/1.1, they also come with more complexity. Determine whether your team has the knowledge and tools needed to carry out and uphold these protocols.

-

Future-Proofing: Considering how online protocols and technologies are always changing, you might want to think about using HTTP/2 or HTTP/3 to future-proof your application or website. These protocols provide cutting-edge functionality and speed boosts that can help you future-proof your website and make sure it stays competitive in the web’s always-shifting landscape.

Conclusion

In conclusion, the selection of the HTTP protocol ought to be predicated on a meticulous assessment of the demands for performance, compatibility, security, and available resources. HTTP/1.1 is still a good option for older systems. However, HTTP/2 and HTTP/3 provide much faster speeds, lower latency, and better security features.

Every protocol—HTTP/1.1, HTTP/2, and HTTP/3—has advantages and disadvantages of its own. Multiplexing, header compression, and server push are included in HTTP/2 to increase efficiency and performance. With QUIC powering HTTP/3, latency is further decreased, head-of-line blocking is lessened, and dependability is improved—particularly for real-time communication and mobile environments.

In the end, a variety of criteria, including resource limitations, compatibility needs, and performance requirements, determine the protocol of choice. While HTTP/2 and HTTP/3 offer contemporary capabilities and additions that can future-proof websites and apps, HTTP/1.1 is still a reliable option for legacy systems.

In the battle of the protocols, HTTP/2 and HTTP/3 emerge as champions, paving the way for a faster, more reliable, and more secure web experience worldwide.