Creating a Node API with Knex and PostgreSQL

One of the challenges of working with databases like PostgreSQL is managing the complexity of SQL queries required to interact with the database. With Knex.js, you can easily create complex queries to select, insert, update, and delete data from a database and create and modify database tables and indexes. In this article, you will explore how to use Knex.js to build applications that leverage PostgreSQL without writing raw SQL queries.

Knex.js is a JavaScript query builder for relational databases that can be used with Node.js. It provides a straightforward interface for building and executing SQL queries in JavaScript, supporting multiple database systems, such as PostgreSQL. PostgreSQL is a popular relational database system widely used in modern web applications and other software systems.

Setting up your Development Environment

To use PostgreSQL, you must first install it on your system. Navigate to the PostgreSQL website and select your operating system to download PostgreSQL. Alternatively, you can use PostgreSQL on the cloud by leveraging platforms such as ElephantSQL, which offers PostgreSQL as a service.

After setting up your PostgreSQL database, create a new project directory by running the command below:

mkdir blog-knex-tutorialNext, cd into your newly created project directory and run the command below to initialize npm in your project directory:

npm init -yThe -y flag initializes npm with all its defaults.

To use Knex with PostgreSQL, you need to install some dependencies. Install the required dependencies by running the command below:

npm install -g knex

npm install pg express dotenvNext, create a .env file in your project’s root directory and store your database credentials.

For example:

//.env

//URI

DATABASE_URI =

//Credentials

DATABASE_NAME =

DATABASE_PASSWORD =Setting up an Express Server

This tutorial will feature a simple blog API to demonstrate combining Knex and PostgreSQL with Node.js to build a Node.js application.

First, create an index.js file in your project’s root directory and add the code block to it:

//index.js

const express = require("express");

const app = express();

const port = 3000;

app.use(express.json());

app.listen(port, () => {

console.log(`App listening on port:${port}`);

});The code block above creates a simple express server. It listens on port 3000 and uses the express.json() middleware to parse incoming JSON requests.

Configuring Knex with PostgreSQL in your Application

Before using Knex in your application, you have to initialize and configure it with the database driver of your choice, in this case, PostgreSQL.

Run the command below to initialize Knex in your application.

knex initThe command above creates a knexfile.js configuration file containing settings for connecting to a database, such as a database type, host, port, username, password, and other configuration options.

By default, the knexfile.js file this command generates will have sqlite3 as its development database. So to use PostgreSQL, replace your current knexfile.js with the code block below:

// Update with your config settings.

require("dotenv").config();

/**

* @type { Object.<string, import("knex").Knex.Config> }

*/

module.exports = {

development: {

client: "pg",

connection: process.env.DATABASE_URI,

migrations: {

directory: "./db/migrations",

}

}

};The code block above configures Knex to use PostgreSQL as its database client. It also specifies the database connection with environmental variables and the file path where your migration files will be stored.

Next, create a db folder in your project directory by running the command below:

mkdir dbThen, create a db.js file in your db folder and import knex and your knexFile.js file.

Like so:

//db/db.js

const knex = require("knex");

const knexFile = require("../knexfile.js");Next, add the code block below to your db.js file:

//db/db.js

const environment = process.env.NODE_ENV || "development";

module.exports = knex(knexFile[environment]);The code block above sets the environment variable to either the NODE_ENV environment variable or development if NODE_ENV is not set. This lets you specify different configurations for different environments, such as development, production, or testing.

Finally, the module.exports statement exports a configured Knex.js instance using the configuration settings from knexFile[environment]. This instance can create database tables, insert data, run queries, and perform other database-related operations in JavaScript code.

Creating Migration Files

Migration files are files you can use to manage changes to the database schema. When you create a new table, modify an existing table, or add new columns, you must modify the database schema to reflect these changes. However, modifying a database schema can be complex and error-prone, especially when working with large or complex databases.

By using migration files, you can define the changes you want to make in a migration file instead of manually modifying the database schema. When you run the migration file using Knex, it automatically applies the changes to the database schema, ensuring that the changes are made consistently and correctly.

To create a migration file, run the command below:

knex migrate:make blogThe command above creates a “blog” (you can replace the blog argument with your preferred migration name) migration file in the file path specified in your knexFile.js file (db/migrations).

Next, open your migration file and replace the up function with the code block below:

exports.up = function (knex) {

//Create a table called "blog" with the following columns: id, title, content, author, created_at, updated_at

return knex.schema.createTable("blog", (table) => {

table.increments("id").primary(); //id column with auto-incrementing primary key

table.string("title").notNullable(); //title column with type string

table.text("content").notNullable(); //content column with type text

table.string("author").notNullable(); //author column with type string

table.timestamps(true, true); //created_at and updated_at columns with type timestamp

});

};The code block above, when executed, creates a blog table in your PostgreSQL database with the tables specified above.

Next, replace the down function with the code block below:

exports.down = function (knex) {

// Drop the "blog" table if it exists

return knex.schema.dropTableIfExists("blog");

};The code block above, when executed, drops the blog table in your PostgreSQL database, which is the opposite of what the up function does.

To run the migrations, run the command below on your terminal:

knex migrate:latestThe command above goes through all your migration files and runs the up function.

To undo the migrations, run the command below:

knex migrate:rollbackThe command above goes through all your migration files and runs the down function.

Session Replay for Developers

Uncover frustrations, understand bugs and fix slowdowns like never before with OpenReplay — an open-source session replay tool for developers. Self-host it in minutes, and have complete control over your customer data. Check our GitHub repo and join the thousands of developers in our community.

Creating CRUD Endpoints

To better organize your code, create a routes folder in your project’s root directory and create a blog.js file.

Next, in your blog.js file, import express, your Knex configuration, and set up the Express Router. Like so:

const express = require("express");

const db = require("../db/db.js");

const router = express.Router();In your blog.js file, you can add CRUD endpoints to interact with your database. The tutorial will feature queries that get all the blogs, get a blog(s) based on a condition, update a blog, and delete a blog from the database.

Getting all Blogs

To implement the logic for getting all blog instances from your database, add the code block below to your blog.js file:

router.get("/blog", async (req, res) => {

try {

const blogs = await db.select("*").from("blog");

res.send(blogs);

} catch (error) {

res.status(500).json({ error: error.message });

}

});The code block above returns all the blog instances in your database.

Getting Blogs Conditionally

To implement the logic for getting a blog instance conditionally from your database, add the code block below to your blog.js file:

router.get("/blog/:id", async (req, res) => {

const { id } = req.params;

try {

const blogs = await db("blog").where({ id });

if (blogs.length !== 0) {

res.send(blogs);

} else {

res.status(404).json({ error: "Blog not found" });

}

} catch (error) {

res.status(500).json({ error: error.message });

}

});The code block above returns a blog instance based on a given id.

Adding a new Blog to your Database

To implement the logic for adding a new blog instance to your database, add the code block below to your blog.js file:

router.post("/blog/new", async (req, res) => {

const { title, content, author } = req.body;

try {

const blog = await db("blog").insert({ title, content, author });

res.status(201).send(blog);

} catch (error) {

res.status(500).json({ error: error.message });

}

});The code block above adds a new blog to your database.

Updating an Existing Blog

To implement the logic that updates a blog in your database, add the code block below to your blog.js file:

router.put("/blog/:id", async (req, res) => {

const { id } = req.params;

const { title, content, author } = req.body;

try {

const blog = await db("blog")

.where({ id })

.update({ title, content, author }, ["id", "title", "content", "author"]);

if (blog.length !== 0) {

res.status(201).send(blog);

} else {

res.status(404).json({ error: "Blog not found" });

}

} catch (error) {

res.status(500).json({ error: error.message });

}

});The code block above updates an existing blog based on a given id.

Deleting a Blog

To implement the logic that deletes a blog from your database, add the code block below to your blog.js file:

router.delete("/blog/:id", async (req, res) => {

const { id } = req.params;

try {

const blog = await db("blog").where({ id }).del();

if (blog) {

res.status(204).send();

} else {

res.status(404).json({ error: "Blog not found" });

}

} catch (error) {

res.status(500).json({ error: error.message });

}

});The code block above deletes a blog based on a given id.

Finally, add the code block below to your blog.js file to export your router.

module.exports = router;Testing your Application

First, navigate to your index.js, import your router, and add it as a middleware. Like so:

//index.js

const blogRouter = require("./routes/blog.js");

app.use(blogRouter);Next, start up your application by running the command below:

node index.jsTo test your application, you can use tools such as Postman to make HTTP requests to your API and verify that it returns the expected results.

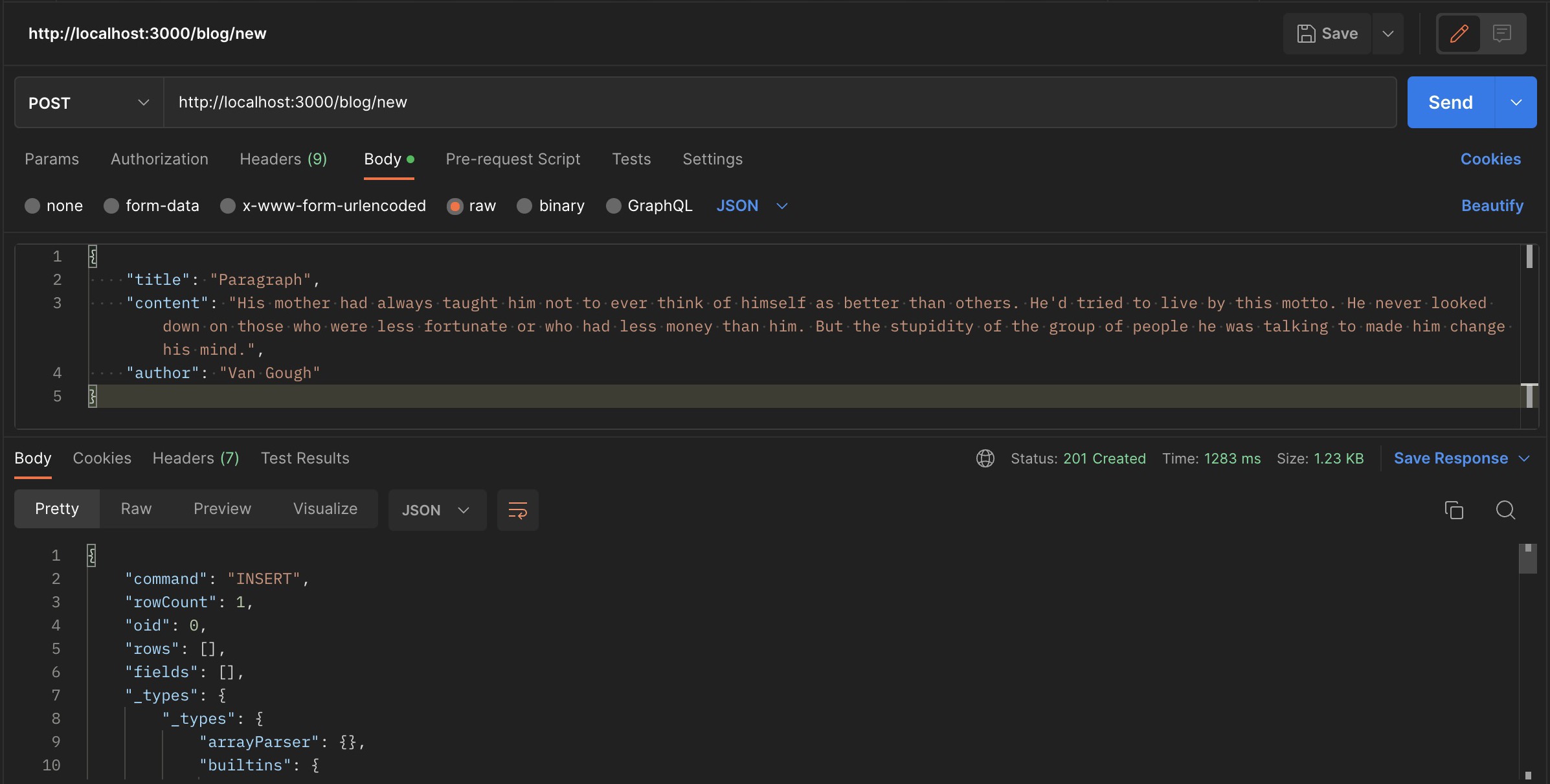

For example, you can make a POST request to http://localhost:3000/blog/new with the data below:

{

"title": "Paragraph",

"content": "His mother had always taught him not to ever think of himself as better than others. He'd tried to live by this motto. He never looked down on those who were less fortunate or who had less money than him. But the stupidity of the group of people he was talking to made him change his mind.",

"author": "Van Gough"

}If you make the request on Postman, your results should be similar to the image below.

Conclusion

In this article, you learned how to use Knex.js with PostgreSQL to build a Node.js API. You also learned how to configure Knex to use PostgreSQL as its database client, connect to a PostgreSQL database locally and remotely, and make queries using Knex.

With the knowledge gained in this article, you can now build robust and scalable Node.js applications that leverage the power of PostgreSQL and the simplicity of Knex.js.