Maximize JavaScript's Performance WITHOUT Transducers

In the previous article on transducers we saw a way to optimize long sequences of operations on arrays, but the solution wasn’t that trivial. Many readers wondered whether we could achieve the same result more simply, and this article will tackle that: we won’t use transducers (as we did before) but with the help of some functional programming, we’ll get equally good solutions.

Discover how at OpenReplay.com.

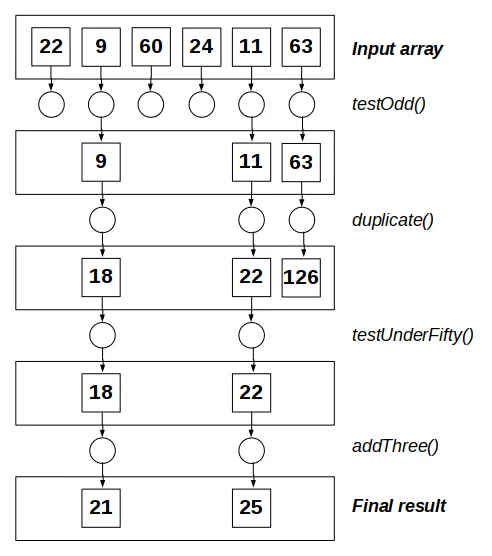

Let’s remember our original problem — which was a trivial example, meant to highlight the issues we had. We had an array, and we wanted to (1) take only odd values, (2) duplicate them, (3) keep values under 50, and (4) add 3 to them. The following code achieved this:

const testOdd = (x) => x % 2 === 1;

const duplicate = (x) => x + x;

const testUnderFifty = (x) => x < 50;

const addThree = (x) => x + 3;

const myArray = [22, 9, 60, 24, 11, 63];

const newArray = myArray

.filter(testOdd)

.map(duplicate)

.filter(testUnderFifty)

.map(addThree``

console.log(newArray); // [21,25]What’s the problem here? Every time you apply an operation, a new intermediate array is created, and with large amounts of data, the extra time may be significant.

In the previous article, we described a solution based on trying to go array element by array element and applying all transformations in order, and we developed transducers for that. But we may make do with something simpler! Let’s see that.

A first (but not too good) solution

Our example was straightforward, and some readers objected by reasoning that it was pretty clear that the only values of the original array that we’d pick would be odd ones, under 25, and then we would duplicate them and add three, so a simple loop could do the job… and we wouldn’t even need the original four functions we used!

const simplifiedPass = (someArray) => {

const result = [];

someArray.forEach((value) => {

if (value % 2 && value < 25) {

result.push(value * 2 + 3);

}

});

return result;

};

const newArray2 = simplifiedPass(myArray);

console.log(newArray2);This works and is perfectly correct — but it’s hard to generalize. With our simple example, deducing which numbers would be processed was easy, but in real life, the logical checks could be very complex, and you wouldn’t be able to reduce them to a single test as we did here. So, while this shows that optimization is doable, the method itself cannot be applied in every case; we need a more general solution.

A second (also handcrafted) solution

As in the previous case, we can do better if we go element by element and apply maps and filters in sequence.

const singlePassFourOps = (someArray) => {

const result = [];

someArray.forEach((value) => {

let ok = false; // ①

if (testOdd(value)) {

value = duplicate(value);

if (testUnderFifty(value)) {

value = addThree(value);

ok = true;

}

}

if (ok) { // ②

result.push(value);

}

});

return result;

};

const newArray3 = singlePassFourOps(myArray);

console.log(newArray3); // [ 21,25 ]We use an ok variable ① to learn if a value from the original array passed all tests ②. Each filter() is transformed into an if, and each map() updates value. If we reach the end of the operations, we set ok to true, which means we’ll push the final value into the result array. This works! However, we had to write a function from scratch, and we may be able to achieve a more general solution.

A third (and more general) solution

The previous code requires writing a function for each set of transformations. However, what about passing all the mapping and filtering functions in an array? This would mean we could write a general function that could deal with any combination of filters and maps.

There’s a problem, though: how will we know whether a function in the array is meant to be a filter or a map? Let’s have an array with pairs of values: first an “M” or “F”, meaning “map” or “filter”, and then the function itself. Testing the first value of the pair will let us know what to do with the second value, the function.

const singlePassManyOps = (someArray, fnList) => {

const result = [];

someArray.forEach((value) => { // ①

if (

fnList.every(([type, fn]) => { // ②

if (type === "M") { // ③

value = fn(value);

return true;

} else {

return fn(value); // ④

}

})

) {

result.push(value);

}

});

return result;

};

const newArray4 = singlePassManyOps(myArray, [

["F", testOdd],

["M", duplicate],

["F", testUnderFifty],

["M", addThree],

]);

console.log(newArray4);This works: we go through the array, element by element ①. For each function in the array ②, if it’s a map ③ we update value, and otherwise we test whether to go on or not ④. Why are we using every() instead of foreach()? The key is that we want to stop going through the array of functions as soon as a filter fails, but foreach() doesn’t have a nice way to stop the loop. On the other hand, every() stops looping as soon as a false result is encountered — which will happen if a filtering function returns false. (And, by the way, that’s why we return true after a mapping, so every() won’t stop.) This is going better! Can we make it simpler?

A fourth (and simpler) solution

We can simplify the work by writing a pair of functions to help us produce the needed array; given a function, they will produce the right pair of values.

const applyMap = (fn) => ["M", fn];

const applyFilter = (fn) => ["F", fn];With these functions, we can change the code above to the following.

const newArray5 = singlePassManyOps(myArray, [

applyFilter(testOdd),

applyMap(duplicate),

applyFilter(testUnderFifty),

applyMap(addThree),

]);

console.log(newArray5);This is nicer, and also declarative in aspect: you clearly see we first apply a filter (testOdd), then a map (duplicate), etc., in the same sequence as in our original code. Also, performance is optimal; we don’t have any intermediate arrays, and we don’t perform any unnecessary filters or maps. We’re done! However…

The fifth (and definitive) solution

In the previous article, we mentioned we wouldn’t always want to finish by producing an array; we could want to apply a final reduce() operation. We can fix this by making the initial value of result and the function that updates it optional, with predefined default values.

const singlePassMoreGeneral = (

someArray,

fnList,

initialAccum = [],

reduceAccumFn = (accum, value) => {

accum.push(value);

return accum;

}

) => {

let result = initialAccum; // ①

someArray.forEach((value) => {

if (

fnList.every(([type, fn]) => {

if (type === "M") {

value = fn(value);

return true;

} else {

return fn(value);

}

})

) {

result = reduceAccumFn(result, value); // ②

}

});

return result;

};

const newArray6 = singlePassMoreGeneral(myArray, [

applyFilter(testOdd),

applyMap(duplicate),

applyFilter(testUnderFifty),

applyMap(addThree),

]);

console.log(newArray6);Apart from adding initialAccum (the initial value of the accumulator for the reducing operation) and reduceAccumFn (the reducer function itself) the other changes are the initial value for result ① and the way result is updated ②. We can test this by finding the sum of the final values.

const newValue = singlePassMoreGeneral(

myArray,

[

applyFilter(testOdd),

applyMap(duplicate),

applyFilter(testUnderFifty),

applyMap(addThree),

],

0,

(x, y) => x + y

);

console.log(newValue);This worked! We can now apply any sequence of mapping and filtering operations optimally, optionally finishing with a reducing step if desired.

Summary

We started the article trying to find a simpler, though equally powerful, way of optimizing a sequence of operations on a large array. We went from a very simple solution, which wasn’t general enough, to a final solution that’s fully equivalent to the transducers’ one, although arguably easier to understand, despite also using functional programming in many ways. This goes to show the validity of the idea that “there’s more than one way to skin a cat”- or, in our case, “to optimize array processes”!

Understand every bug

Uncover frustrations, understand bugs and fix slowdowns like never before with OpenReplay — the open-source session replay tool for developers. Self-host it in minutes, and have complete control over your customer data. Check our GitHub repo and join the thousands of developers in our community.