Setting up GitLab CI/CD Pipelines for Front-End Projects

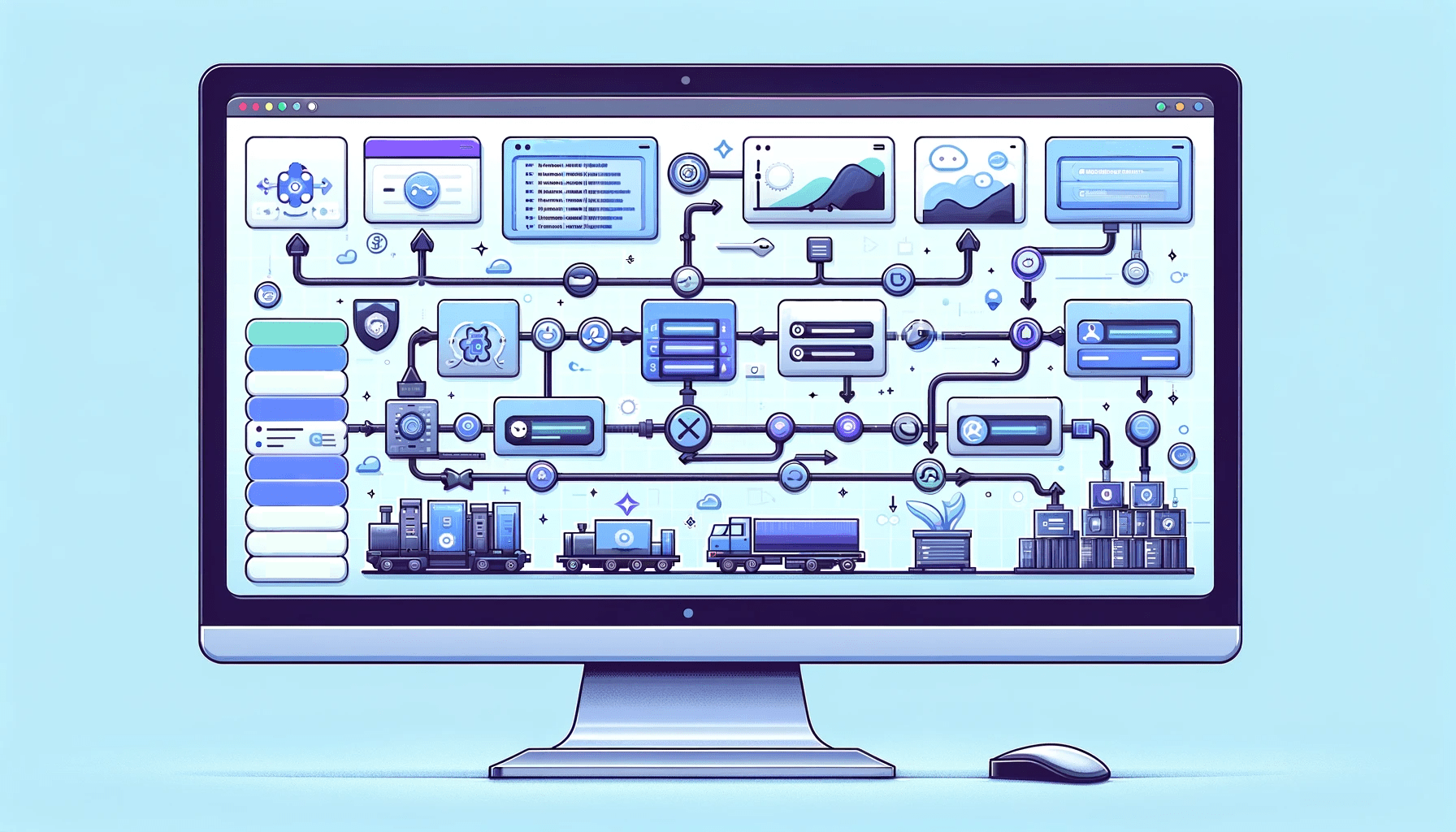

Welcome to the world of seamless development and deployment with GitLab CI/CD, tailored specifically for front-end projects. GitLab CI/CD, an integral part of the GitLab platform, automates how we build, test, and deploy code. In a nutshell, it powers continuous integration and continuous deployment by turning complex workflows into automated pipelines. This article walks through repository setup, pipeline configuration, builds and tests, deployment, Docker integration, advanced configurations, monitoring, and best practices.

GitLab CI/CD orchestrates your software delivery process, ensuring that your code undergoes rigorous testing and seamlessly moves through different environments before reaching production. Imagine a well-coordinated dance where every move, from writing code to deploying it, is choreographed effortlessly.

    stages:
      - build
      - test
      - deploy

In the above code snippet, we define the stages our pipeline will go through: building the project, running tests, and finally deploying the application. GitLab CI/CD uses a simple YAML file, .gitlab-ci.yml, as the blueprint for these pipelines.

Significance in Front-End Development

Why is this important for front-end developers, then? Imagine that each time you push modifications to your front-end repository, GitLab CI/CD starts a sequence of events. It builds your code, tests it to find errors early, and then publishes your masterwork if everything checks out. It’s like having a conscientious assistant take care of the tiresome parts—no more manual steps and no more forgetting to run tests.

GitLab CI/CD becomes your dependable ally in front-end development, where user experience is critical, and you must ensure your web applications are aesthetically pleasing and functionally sound. So grab a seat as we set out to use GitLab to demystify and streamline your front-end continuous integration and delivery process. Start the automation now!

GitLab Repository Setup

Setting up your GitLab repository is the starting point for our CI/CD journey. This is where the magic of version control and collaboration kicks in. In this section, we’ll walk you through creating and configuring your repository, laying a solid foundation for seamless CI/CD integration.

Creating and Configuring Repository

Let’s lay the groundwork for our GitLab CI/CD adventure by setting up the repository. Head over to GitLab, click that “+” button, and create a new project, which gives you a fresh repository. Simple, right? But wait, there’s a twist. The real magic lies in configuring your repository settings.

    # Option A: clone the new repository (this also sets up the "origin" remote)
    git clone <your_repository_url>
    cd <your_project_folder>

    # Option B: connect an existing local project instead
    cd <your_project_folder>
    git init
    git remote add origin <your_repository_url>

In this snippet, we either clone the fresh repository, which configures the Git remote automatically, or initialize Git in an existing project folder and add the GitLab repository as a remote. Use one option, not both: running git remote add origin inside a clone fails because origin already exists. Now, let’s talk about those settings. GitLab allows you to customize your repository’s behavior. Do you want to restrict who can contribute? Enable issues and merge requests? It’s all in the settings. Go on, tailor it to suit your project’s needs.

Front-End Project Branching Strategy

Branching in GitLab CI/CD is like choosing different lanes in a race. You don’t want your experimental features crashing into your stable code, right? Let’s adopt a branching strategy that keeps everything smooth.

    # Create a new branch for your feature
    git checkout -b feature/my-awesome-feature

    # Work on your feature and commit changes
    git add .
    git commit -m "Implement my awesome feature"

    # Push the branch to GitLab
    git push origin feature/my-awesome-feature

Here, we create a feature branch, work on our dazzling feature, and then push it to GitLab. This strategy keeps our main branch clean and ready for deployment. With these setup steps, our GitLab repository becomes the canvas where our CI/CD masterpiece will unfold. Ready to dive deeper into the CI/CD pool? Let’s swim into the next phase!

GitLab CI/CD Configuration

Get ready to unleash the power of automation! In this section, we dive into the fundamentals of .gitlab-ci.yml. This YAML file is your ticket to orchestrating CI/CD pipelines. We’ll cover defining stages, jobs, and playing with variables – all the essential elements to make your CI/CD symphony sing.

.gitlab-ci.yml Fundamentals

Time to breathe life into your CI/CD dreams by understanding the .gitlab-ci.yml file. It’s like the maestro’s sheet music, guiding the performance of your pipeline. Let’s dive into the essentials.

    # .gitlab-ci.yml
    stages:
      - build
      - test
      - deploy

In this snippet, we’re defining the stages our pipeline will dance through: building, testing, and deploying. Each stage represents a phase in your CI/CD process. Simple, right? Now, let’s get to the nitty-gritty of jobs.

Defining Stages and Jobs

Jobs are the dancers on your CI/CD stage, each with a specific role. Check out this snippet that defines a build job:

    build:
      stage: build
      script:
        - echo "Building the front-end"
        - npm install
        - npm run build

Here, we’ve named our job build, assigned it to the build stage, and provided a script to execute. In this case, we’re using npm to install dependencies and build our front end. Stages run in the order they are listed under stages, and a stage starts only after every job in the previous stage has succeeded, creating a harmonious flow.
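
To make that ordering concrete, here is a minimal sketch of a build job that hands its output to a job in a later stage through artifacts. The node:20 image, the build/ output directory, and the check_output job are assumptions for illustration, not part of the original setup; substitute whatever your project actually uses.

```yaml
# Sketch only: image tag, output directory, and the second job are assumptions.
stages:
  - build
  - test

build:
  stage: build
  image: node:20            # assumed Node.js image
  script:
    - npm ci                # clean install, requires a package-lock.json
    - npm run build
  artifacts:
    paths:
      - build/              # assumed build output directory

check_output:
  stage: test               # starts only after the build stage succeeds
  image: node:20
  script:
    - ls build/             # artifacts from the build job are downloaded automatically
```

Passing the output along as an artifact means later stages can reuse the build instead of rebuilding it.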

Variables and Environments

Now, let’s add some flexibility to our CI/CD symphony with variables and environments. Variables store values you might reuse across jobs.

    variables:
      NODE_ENV: "production"

    deploy_production:
      stage: deploy
      script:
        - echo "Deploying to production"
        - npm install
        - npm run deploy
      environment:
        name: production
        url: https://yourproductionurl.com

Here, NODE_ENV is a variable defining the environment as production. Because it is declared at the top level, GitLab exports it as an environment variable to every job, so the npm scripts in the deployment job see NODE_ENV=production. The environment block specifies where your application is deployed, making it easy to track in the GitLab UI.
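
One thing to watch: a top-level variable reaches every job, and npm skips devDependencies when NODE_ENV is production, which can leave a build or test job without its tooling. A possible way around this, sketched below, is to override the value for a single job. The value "test" is an assumption; use whatever your tooling expects.

```yaml
variables:
  NODE_ENV: "production"    # global default for all jobs

test:
  stage: test
  variables:
    NODE_ENV: "test"        # job-level value overrides the global one
  script:
    - echo "NODE_ENV is $NODE_ENV"
    - npm install
    - npm run test
```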

Understanding these fundamentals unlocks the potential of your CI/CD pipeline. As your code takes center stage, the configuration orchestrates a flawless performance.

Front-end Build and Tests

Building the front end is where your code transforms into a tangible masterpiece. Join us as we configure build jobs, run unit tests, and ensure the brilliance of your front-end code. This section is about crafting a seamless build process and verifying the quality of your work.

Configuring Build Jobs

Picture this as constructing the foundation of a skyscraper; a solid build ensures a stable structure.

    build:
      stage: build
      script:
        - echo "Building the front-end"
        - npm install
        - npm run build

Here, our build job is a series of commands executed in sequence. We echo a message (just for fun), install dependencies with npm install, and then execute the build script with npm run build. Adjust this script to match your front-end setup: Webpack, Gulp, or whatever tooling magic you wield.
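
Each job runs in its own environment, so dependencies are installed again in every job. A common way to speed this up is GitLab’s cache keyword. The sketch below keys the cache on package-lock.json and points npm at a project-local .npm directory; the directory name and the switch to npm ci are assumptions.

```yaml
build:
  stage: build
  cache:
    key:
      files:
        - package-lock.json   # a new cache is created whenever the lockfile changes
    paths:
      - .npm/                 # assumed project-local npm cache directory
  script:
    - npm ci --cache .npm --prefer-offline
    - npm run build
```

Caching npm’s download cache rather than node_modules keeps npm ci usable, since npm ci removes node_modules before installing.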

Unit Tests and Code Quality Checks

Now that our build foundation is rock-solid, it’s time to ensure our code behaves as expected. Enter unit tests and code quality checks, our vigilant guardians against bugs and messy code.

    test:
      stage: test
      script:
        - echo "Running unit tests"
        - npm install
        - npm run test
        - echo "Checking code quality"
        - npm run lint

This test job runs in the test stage, after the build stage has finished. We install dependencies, run unit tests with npm run test, and check code quality using a linter with npm run lint. If any test fails or the linter reports errors, the job fails and the pipeline stops, preventing subpar code from reaching the spotlight.

With this configuration, our front end is not just built; it’s fortified with tests and quality checks, ensuring a spectacular performance on any screen.

Deployment Strategies

Now that your front end is polished, it’s showtime! Discover deployment strategies, from development to staging, and the grand production rollout considerations. We’ll guide you through creating environments and ensuring a smooth transition from development to your user’s screens.

Development and Staging Environments

Let’s talk about development and staging environments—our rehearsal stages.

    deploy_dev:
      stage: deploy
      script:
        - echo "Deploying to development"
        - npm install
        - npm run deploy:dev
      environment:
        name: development
        url: https://yourdevurl.com

In this deploy_dev job, we’re deploying to the development environment. Adjust the script to match your deployment process. Maybe it’s copying files to a server or uploading to a cloud platform. The environment block in GitLab CI/CD adds a touch of elegance, providing a dedicated space in the GitLab UI to monitor your development deployments.

Considerations for Production Deployment

As the curtain rises on the production stage, meticulous planning is essential. Safety nets, checks, and double-checks ensure our audience experiences a flawless performance.

    deploy_production:
      stage: deploy
      script:
        - echo "Deploying to production"
        - npm install
        - npm run deploy:prod
      environment:
        name: production
        url: https://yourproductionurl.com

Here, deploy_production takes center stage. The script includes production-specific deployment steps. Remember, production is where your code faces the world, so be cautious. GitLab CI/CD empowers you to define distinct deployment strategies for each environment, mitigating risks and ensuring a smooth transition from rehearsal to the spotlight.
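
One possible safety net is a manual gate. The sketch below assumes production releases come from the default branch and that someone should click a button in the GitLab UI before the deployment starts; both are assumptions about your release process rather than requirements.

```yaml
deploy_production:
  stage: deploy
  script:
    - npm install
    - npm run deploy:prod
  environment:
    name: production
    url: https://yourproductionurl.com
  rules:
    # only offer this job on the default branch, and wait for a manual trigger
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
      when: manual
```

On any other branch the job is not added to the pipeline at all.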

With these deployment strategies in place, your front-end project is not just code; it’s a live performance, evolving seamlessly through development, staging, and production.

Containerization Integration

Ever thought of encapsulating your front-end magic in a container? Docker is here to make it happen! Join us in exploring Docker for front-end projects, building and pushing Docker images, and orchestrating container deployment. Containerization adds a touch of consistency and portability to your front-end show.

Docker for Front-end Projects

Containerizing your front-end project can be complex. Developers may find it challenging to understand why and how Docker is used in this context.

To simplify the process, we utilize Docker to encapsulate the front end, isolating it with its dependencies. The Dockerfile acts as a set of instructions to construct a portable container for our application.

    # Dockerfile

    # Use an official Node.js runtime as a base image
    FROM node:14

    # Set the working directory in the container
    WORKDIR /usr/src/app

    # Copy package.json and package-lock.json to the container
    COPY package*.json ./

    # Install dependencies
    RUN npm install

    # Copy the local project files to the container
    COPY . .

    # Build the front-end
    RUN npm run build

    # Specify the command to run on container start
    CMD [ "npm", "start" ]

The comments in this Dockerfile explain each step, from choosing a Node.js base image to setting up the working directory and executing commands to install dependencies and build the front end.

Building and Pushing Docker Images

Developers might struggle with the build and push process, unsure of why these steps are necessary.

In our CI/CD pipeline, the docker_build job automates the creation of a Docker image and pushes it to a registry. Building compiles the application within the Docker image, and pushing ensures this image is available for deployment.

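    docker_build:
      stage: build
      script:
        - echo "Building Docker image"
        - docker build -t your-docker-registry/your-project:latest .
        - echo "Pushing Docker image"
        - docker push your-docker-registry/your-project:latest

Here, docker build creates the image from the Dockerfile in the repository root and tags it, and docker push uploads that image to your registry. The job assumes that the runner has access to a Docker daemon and is already authenticated against the registry.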
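
For the docker_build job to work, the runner needs a Docker daemon and a registry login. A minimal sketch, assuming a runner that allows Docker-in-Docker and a project that uses GitLab’s built-in container registry, could look like this. The docker:24 image tags are an assumption; the CI_REGISTRY* and CI_COMMIT_SHORT_SHA variables are predefined by GitLab.

```yaml
docker_build:
  stage: build
  image: docker:24                 # assumed Docker CLI image
  services:
    - docker:24-dind               # Docker-in-Docker daemon, needs a privileged runner
  variables:
    DOCKER_TLS_CERTDIR: "/certs"   # lets the CLI and the daemon talk over TLS
  before_script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
  script:
    - docker build -t "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA" .
    - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"
```

Tagging with the commit SHA instead of latest makes it clear which commit a deployed image came from.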

Container Orchestration in Deployment

Deploying a Docker container may seem like a black box for some developers.

In deploy_production, we pull the Docker image and run it in detached mode. This script emphasizes the orchestration of deploying the Docker container in a production environment.

    deploy_production:
      stage: deploy
      script:
        - echo "Deploying Docker container to production"
        - docker pull your-docker-registry/your-project:latest
        - docker run -d -p 80:80 --name your-project your-docker-registry/your-project:latest
      environment:
        name: production
        url: https://yourproductionurl.com

Adjust the port mappings and container name based on your application’s requirements. Note that docker run fails if a container named your-project already exists, so stop and remove the previous container before redeploying.

With Docker in the mix, our front end becomes portable, scalable, and consistent across various environments. The curtain rises on a new act, showcasing the power of containerization in our GitLab CI/CD symphony.

Advanced CI/CD Configurations

Let’s take your CI/CD game to the next level! In this section, we’ll explore advanced configurations – parallel jobs for efficiency, conditional job execution, and customizing pipeline triggers. It’s time to optimize your pipeline for peak performance and efficiency.

Parallel Jobs for Efficiency

In the world of CI/CD, bottlenecks can hinder the speed of your pipeline, much like a sluggish engine that needs fine-tuning. Without parallelization, tasks in your pipeline might be processed sequentially, leading to idle time and delayed deployments.

Parallel jobs are the turbo boosters your CI/CD engine needs. They enable tasks to run concurrently, tackling the bottleneck issue head-on. Imagine it as unleashing multiple engines in your car, making it zoom through stages simultaneously. In this section, we’ll explore how parallel jobs significantly enhance pipeline efficiency, enabling a faster and more responsive CI/CD process.

    stages:
      - build
      - test
      - deploy

    # Hidden job (leading dot): never runs itself, only serves as a template
    .build_template: &build_template
      stage: build
      script:
        - echo "Building the front-end"
        - npm install
        - npm run build

    # No leading dot here, otherwise GitLab would skip these jobs too
    job1:
      <<: *build_template

    job2:
      <<: *build_template

Here, job1 and job2 both belong to the build stage, so GitLab starts them concurrently when enough runners are available, cutting down the stage’s total execution time. Note that the job names carry no leading dot: GitLab treats names that start with a dot as hidden jobs and never runs them. Adjust the parallelism based on your project’s needs.

Understanding Parallel Jobs: Within a stage, every job starts at the same time, while the stages themselves run one after another. The commands inside a single job’s script, such as installing dependencies and then building the front end, still execute in sequence.

Importance of Parallelization:

Parallel jobs enhance pipeline efficiency by allowing tasks to be performed simultaneously. In this example, job1 and job2 execute the same build template concurrently. In a real project, each job would carry different work so that the build stage finishes sooner.

What Tasks Can Run in Parallel: Tasks that are independent, such as building different parts of the project or running tests for different modules, can be parallelized. However, tasks with dependencies should be sequenced appropriately to avoid conflicts.
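
For the "tests for different modules" case, GitLab’s parallel:matrix keyword can fan one job definition out into several concurrent jobs. The sketch below is an illustration under assumptions: the module names components and utils are hypothetical, and it presumes your test runner accepts a path filter as an extra argument.

```yaml
test:
  stage: test
  parallel:
    matrix:
      - MODULE: [components, utils]   # hypothetical module names, one job per value
  script:
    - npm install
    - npm run test -- "$MODULE"       # assumes the test runner accepts a path filter
```

Each generated job receives its own MODULE value as an environment variable.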

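    stages:
      - build
      - test
      - deploy

    .build_template: &build_template
      stage: build
      script:
        - echo "Building the front-end"
        - npm install
        - npm run build

    .test_template: &test_template
      stage: test
      script:
        - echo "Running unit tests"
        - npm install
        - npm run test

    job1:
      <<: *build_template

    job2:
      <<: *test_template
      needs: []   # start immediately instead of waiting for the build stage

In this example, job1 handles building and job2 handles testing. Because they belong to different stages, job2 would normally wait for the build stage to finish. The empty needs list tells GitLab that job2 depends on no other job, so it starts right away and the two run in parallel. This works here because the test job installs its own dependencies and does not use the build output.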

Conditional Job Execution

Imagine a pipeline that adapts to your code’s needs. Conditional job execution allows you to run jobs only when certain conditions are met.

    stages:
      - build
      - test
      - deploy

    build:
      stage: build
      script:
        - echo "Building the front-end"
        - npm install
        - npm run build

    test:
      stage: test
      script:
        - echo "Running unit tests"
        - npm install
        - npm run test

    deploy_dev:
      stage: deploy
      script:
        - echo "Deploying to development"
        - npm install
        - npm run deploy:dev
      environment:
        name: development
        url: https://yourdevurl.com
      only:
        - branches
      except:
        - master

In this example, deploy_dev runs for pushes to any branch except master. Tailor these conditions to match your branching strategy.
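
GitLab’s documentation now steers new configurations toward the rules keyword in place of only and except. As a rough sketch, the same condition could be written as follows; treat it as an approximation of the behavior above rather than an exact drop-in, and note that rules cannot be combined with only or except in the same job.

```yaml
deploy_dev:
  stage: deploy
  script:
    - npm install
    - npm run deploy:dev
  environment:
    name: development
    url: https://yourdevurl.com
  rules:
    # run in branch pipelines, except on master
    - if: $CI_COMMIT_BRANCH && $CI_COMMIT_BRANCH != "master"
```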

Customizing Pipeline Triggers

Customizing pipeline triggers allows you to dictate when your pipeline kicks into action. Maybe you want it to run only for specific events like pushes or tags.

    stages:
      - build
      - test
      - deploy

    build:
      stage: build
      script:
        - echo "Building the front-end"
        - npm install
        - npm run build

    test:
      stage: test
      script:
        - echo "Running unit tests"
        - npm install
        - npm run test

    deploy_dev:
      stage: deploy
      script:
        - echo "Deploying to development"
        - npm install
        - npm run deploy:dev
      environment:
        name: development
        url: https://yourdevurl.com
      only:
        - tags

Here, deploy_dev is added to the pipeline only when a Git tag is pushed, while build and test still run for every push. Customize triggers to align with your release strategy.
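
The only keyword filters a single job. To decide whether a pipeline is created at all, GitLab offers a top-level workflow block. The sketch below is one possible policy, not a recommendation: pipelines for merge requests, for tags, and for the default branch, and nothing else.

```yaml
workflow:
  rules:
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"   # merge request pipelines
    - if: $CI_COMMIT_TAG                                 # tag pushes
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH        # pushes to the default branch
```

When no rule matches, no pipeline is created for that event.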

These advanced configurations elevate your CI/CD setup from a basic script to a dynamic, adaptive system. Tune, tweak, and witness your pipeline evolve into a finely tuned orchestra.

Monitoring and Reporting

Every show needs a vigilant crew backstage. This section introduces tools for monitoring and analyzing pipeline reports and gracefully handling failures. Gain insights into your CI/CD workflow and ensure flawless performance every time.

Tools for Monitoring

Ensuring your CI/CD process runs smoothly is akin to having a backstage crew. Monitoring tools serve as your vigilant crew members, keeping an eye on the performance of your pipelines. Integrate tools like Prometheus or Grafana to gather insights into your CI/CD workflows.

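    stages:
      - build
      - test
      - deploy

    variables:
      # Address of a Prometheus Pushgateway (placeholder); Prometheus itself scrapes metrics and does not accept pushes
      PUSHGATEWAY_URL: "http://your-pushgateway-url:9091"

    monitoring:
      stage: deploy
      script:
        - echo "Sending metrics to Prometheus"
        - echo "ci_deploy_success 1" | curl --data-binary @- "$PUSHGATEWAY_URL/metrics/job/ci-cd"
      only:
        - master

In this monitoring job, we push a success metric to a Prometheus Pushgateway, which Prometheus then scrapes. The metric name ci_deploy_success is only an example. Because this job shares the deploy stage, it runs alongside the deployment jobs; move it to a later stage if the metric should be recorded only after a deployment succeeds. Adapt this to your monitoring tool of choice, ensuring it aligns with your pipeline stages.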

Analyzing Pipeline Reports

A successful performance demands a review of the script. Pipeline reports act as your post-show analysis, providing insights into what went well and what needs improvement.

    stages:
      - build
      - test
      - deploy

    build:
      stage: build
      script:
        - echo "Building the front-end"
        - npm install
        - npm run build
      artifacts:
        paths:
          - build/

    test:
      stage: test
      script:
        - echo "Running unit tests"
        - npm install
        - npm run test
      artifacts:
        reports:
          junit: junit.xml

    deploy_dev:
      stage: deploy
      script:
        - echo "Deploying to development"
        - npm install
        - npm run deploy:dev
      environment:
        name: development
        url: https://yourdevurl.com
      artifacts:
        paths:
          - build/

Here, each job produces artifacts: files or reports that capture crucial information. The junit entry tells GitLab to parse junit.xml, a file in the widely used JUnit XML format, and display the test results in the pipeline view; your test runner must be configured to write that file. Leverage these artifacts for thorough post-deployment analysis.
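
By default, artifacts are uploaded only when a job succeeds, which is exactly when a test report is least interesting. A small sketch of a test job that keeps its report even on failure follows; the one-week retention is an arbitrary assumption, and a reporter such as jest-junit (if you use Jest) is assumed to produce junit.xml.

```yaml
test:
  stage: test
  script:
    - npm install
    - npm run test
  artifacts:
    when: always          # upload the report even if the tests fail
    expire_in: 1 week     # assumed retention period
    reports:
      junit: junit.xml    # must be written by your test runner
```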

Handling Failures and Rollbacks

Even the best performances encounter hiccups. Handling failures gracefully and orchestrating rollbacks ensures a resilient CI/CD process.

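    stages:
      - build
      - test
      - deploy

    deploy_dev:
      stage: deploy
      script:
        - echo "Deploying to development"
        - npm install
        # Roll back on failure, then exit non-zero so the job is still marked as failed
        - npm run deploy:dev || { echo "Rolling back"; npm run rollback:dev; exit 1; }
      environment:
        name: development
        url: https://yourdevurl.com

In deploy_dev, we attempt deployment and, if it fails, execute a rollback script. The final exit 1 matters: without it, a successful rollback would make the job pass and hide the failed deployment. Customize the rollback script based on your application’s requirements.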

Monitoring, analyzing reports, and gracefully handling failures are the final acts of our CI/CD performance. With these practices, your pipeline evolves into a resilient and well-monitored system, ensuring a standing ovation for every deployment.

Best Practices and Optimization

It’s time to fine-tune your CI/CD orchestra! Discover GitLab CI/CD best practices, optimize pipeline performance, and prioritize security considerations. Follow these guidelines to ensure your CI/CD setup transforms into a well-tuned symphony, delivering reliable and secure deployments.

GitLab CI/CD Best Practices

Let’s distill the essence of smooth CI/CD orchestration into a set of best practices. Keep your .gitlab-ci.yml file clean and modular, avoiding unnecessary complexity. Leverage variables for reusable values, enhancing maintainability.

    variables:
      NODE_VERSION: "14"
      BUILD_DIR: "build/"

    stages:
      - build
      - test
      - deploy

Here, we define variables for Node.js version and build directory, promoting consistency across the pipeline. Adopt a clear naming convention for jobs and stages, making your CI/CD symphony easy to understand and follow.
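
Declaring variables only pays off once jobs actually read them. One way to do that while keeping the file modular is the extends keyword, sketched below. The hidden job name .node_job and the move of the install step into before_script are assumptions made for this example.

```yaml
variables:
  NODE_VERSION: "14"
  BUILD_DIR: "build/"

# Hidden job holding the shared setup (the name is an assumption)
.node_job:
  image: "node:${NODE_VERSION}"
  before_script:
    - npm ci

build:
  extends: .node_job
  stage: build
  script:
    - npm run build
  artifacts:
    paths:
      - $BUILD_DIR

test:
  extends: .node_job
  stage: test
  script:
    - npm run test
```

Changing the Node.js version or the output directory is then a one-line edit.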

Pipeline Performance Optimization

Optimizing your pipeline is akin to fine-tuning an instrument for a flawless performance. Parallelize jobs strategically to reduce overall execution time.

    stages:
      - build
      - test
      - deploy

    .build_template: &build_template
      stage: build
      script:
        - echo "Building the front-end"
        - npm install
        - npm run build

    job1:
      <<: *build_template

    job2:
      <<: *build_template

Here, we use YAML anchors to create a reusable template for the build stage. Jobs job1 and job2 leverage this template and, because they share a stage, run in parallel. In practice, give each job a different target so the parallel work is not duplicated. This optimization enhances the efficiency of your pipeline.

Security Considerations

Security is non-negotiable in any performance. Safeguard your CI/CD process by protecting sensitive information using GitLab CI/CD variables.

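    stages:
      - build
      - test
      - deploy

    variables:
      NODE_ENV: "production"
      DOCKER_REGISTRY_USER: $CI_REGISTRY_USER
      DOCKER_REGISTRY_PASSWORD: $CI_REGISTRY_PASSWORD
      # API_KEY is not declared here. Define it under Settings > CI/CD > Variables
      # as a masked, protected variable so its value never appears in the repository.

    build:
      stage: build
      script:
        - echo "Building the front-end"
        - npm install
        - npm run build

Here, we use CI/CD variables for sensitive data like Docker registry credentials. GitLab has no secure: key for encrypting values inside .gitlab-ci.yml, so the API key is defined in the project’s CI/CD settings as a masked and protected variable instead of being committed to the file. Jobs still receive it as the environment variable API_KEY.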

By adhering to these best practices, optimizing pipeline performance, and prioritizing security, your GitLab CI/CD setup transforms into a well-tuned orchestra, delivering reliable and secure deployments.

Conclusion

As the curtain falls on our CI/CD journey, let’s recap the key steps, emphasize continuous improvement, and share closing thoughts on front-end CI/CD with GitLab. Your CI/CD symphony deserves a standing ovation, and we’ll leave you with insights to continue refining and evolving your front-end performance.

Recap of Key Steps

We started by creating and configuring our GitLab repository, establishing a solid foundation. Then, we delved into the fundamentals of the .gitlab-ci.yml file, orchestrating our CI/CD symphony with build, test, and deploy stages. The integration of Docker brought portability and consistency to our front end, transforming it into a well-packaged performance.

    stages:
      - build
      - test
      - deploy

Our pipeline danced through development, staging, and production environments, embracing parallelism, conditional executions, and custom triggers for a dynamic performance. Monitoring, analyzing reports, and gracefully handling failures ensured a resilient show.

Continuous Improvement Emphasis

But the journey doesn’t end here. The heart of CI/CD lies in continuous improvement. Embrace the GitLab CI/CD best practices, optimize your pipeline, and prioritize security. Iteration is the key to a refined and ever-evolving orchestration.

    variables:
      NODE_VERSION: "14"
      BUILD_DIR: "build/"

Closing Thoughts on Front-end CI/CD with GitLab

In the realm of front-end development, GitLab CI/CD becomes your silent maestro, orchestrating deployments seamlessly. The collaborative power of GitLab combined with the automation of CI/CD empowers developers to focus on crafting remarkable user experiences. As you navigate the landscape of continuous integration and continuous deployment, remember, the show must go on – with GitLab CI/CD, it will, flawlessly. Bravo on completing this performance, and here’s to the continuous evolution of your front-end symphony!

References

Here’s a backstage pass to the resources that fueled our journey through setting up GitLab CI/CD pipelines for front-end projects:

- GitLab Documentation: Your go-to guide for GitLab CI/CD configurations and features.
- Docker Documentation: Unpack the secrets of containerization with Docker’s official documentation.
- Node.js Documentation: Dive into the world of Node.js for building robust front-end applications.
- Continuous Integration vs. Continuous Deployment: Understand the concepts that underpin CI/CD workflows.
- Prometheus and Grafana: Explore the powerful duo for monitoring your CI/CD pipelines.