JavaScript SEO best practices

JavaScript is a prevalent programming language used by more than 80 percent of renowned stores across the United States. However, websites that rely on JavaScript bring some unique SEO processes and issues, so it’s essential to follow best practices at all times.

The relationship between SEO and JavaScript has long been debated, and understanding JavaScript basics has become a critical task for SEO professionals. Most websites being developed today use JavaScript, relying on its frameworks to build web pages and control the various page elements. Here’s what Google’s webmaster is saying:

JavaScript frameworks were first implemented on the client side only, which caused much trouble with client-side rendering. More recently, JavaScript has also been embedded in host software and on the server side of web servers to minimize that trouble. This shift has paved the way for pairing JavaScript with SEO practices to boost the search engine value of web pages written in JavaScript.

JavaScript SEO - What is It?

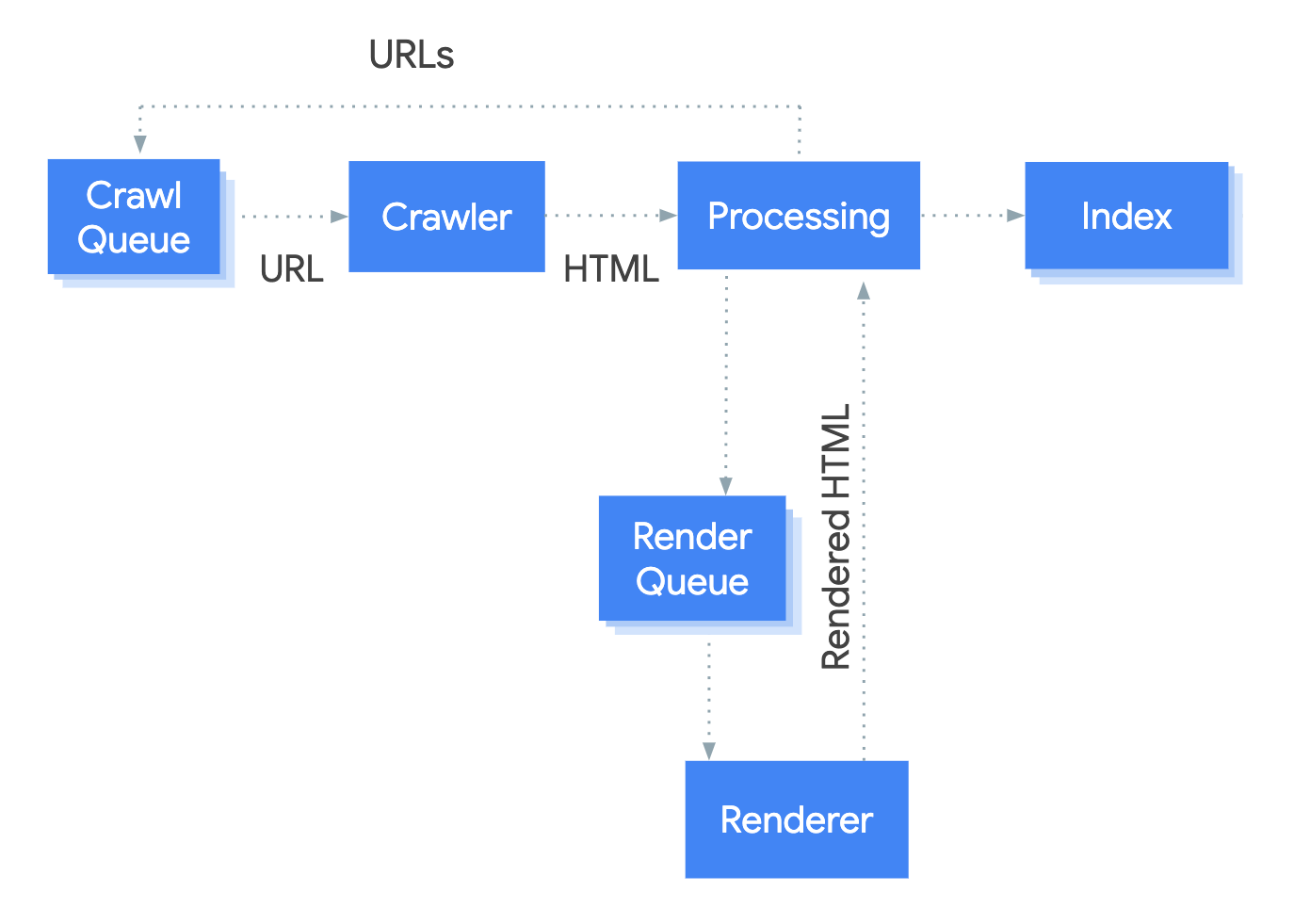

JavaScript SEO focuses on optimizing websites built with JavaScript so that they are visible in search engines. Primarily, it covers optimizing content, making web pages discoverable by search engines through best practices, improving page load times, and so on. Let’s first see how the Google search engine processes JavaScript.

Image Source: developers.google.com

JavaScript is essential to the modern web because it makes websites scalable and easier to maintain. JavaScript SEO is primarily concerned with the following:

- Preventing, troubleshooting, and diagnosing ranking problems for single-page applications and websites built on JavaScript frameworks, including Angular, React, and Vue.

- Optimization of content injected through JavaScript for rendering, crawling, and indexing by search engines.

- Enhancing page load times for pages executing JavaScript code for a streamlined user experience.

- Ensuring that web pages are discoverable by search engines by following best practices.

JavaScript SEO Issues

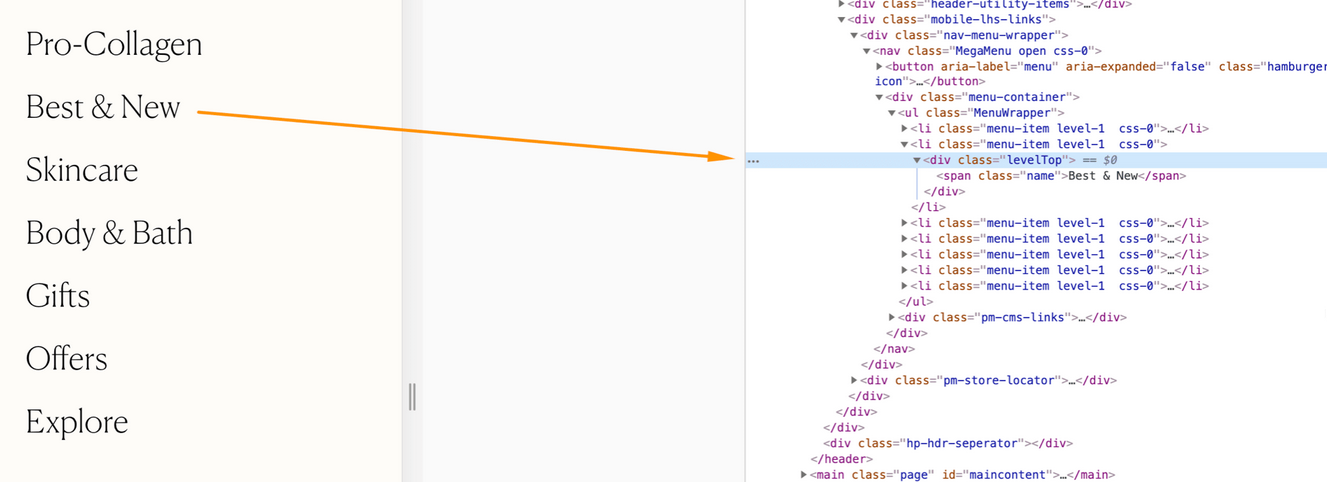

Navigation

A common JavaScript SEO issue is website navigation that is not crawlable. This happens when navigation links do not adhere to web standards, so Google cannot see or follow them. As a result:

- The authority within the website is not distributed appropriately.

- It’s more difficult for Google to discover internal pages.

- The relationships between pages within the website are unclear.

The outcome is a website with links that Google cannot follow. Here’s an example from Search Engine Journal of a navigation issue.

Image Source: searchenginejournal.com

Internal Links

Aside from injecting content into the DOM, JavaScript can also affect the crawlability of links. Crawling the links on a page is how Google discovers new pages. As a best practice, Google specifically recommends linking pages with HTML anchor tags that have href attributes and descriptive anchor text.
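The recommendation above can be turned into a quick check. The following is a minimal sketch, not an official Google rule set: the function name `isCrawlableHref` is hypothetical, and treating fragment-only links (`#...`) as non-crawlable is an assumption made for this illustration. A link such as `<a href="/products">Our products</a>` passes, while a link that only works through a click handler does not.

```javascript
// Hypothetical helper: decides whether an anchor's href value looks like
// a real URL that a crawler could follow without running click handlers.
function isCrawlableHref(href) {
  // No href attribute at all, e.g. <a onclick="goTo('page')">
  if (typeof href !== 'string') return false;

  const target = href.trim();

  // Empty or fragment-only targets do not point to another page (assumption).
  if (target === '' || target.startsWith('#')) return false;

  // javascript: pseudo-URLs run code instead of naming a page.
  if (/^javascript:/i.test(target)) return false;

  return true;
}

console.log(isCrawlableHref('/products'));
console.log(isCrawlableHref('javascript:void(0)'));
console.log(isCrawlableHref(undefined));
```

In a browser, a check like this could be run over `document.querySelectorAll('a')` using each element’s `getAttribute('href')` to list the links that need fixing.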

Google does not navigate from one page to another as a regular user would. Instead, it downloads a stateless version of each page, meaning that it will not catch any changes on a page that rely on what happened on a previous page. Are links affected by this?

Yes. If a link depends on a user action, Google will be unable to find it and won’t be able to discover all the pages on the website. Here’s an example of bad linking practice.

<a onclick="goTo('page')">nope, no href</a>
<a href="javascript:goTo('page')">nope, missing link</a>
<a href="javascript:void(0)">nope, missing link</a>

Client-Side Rendering

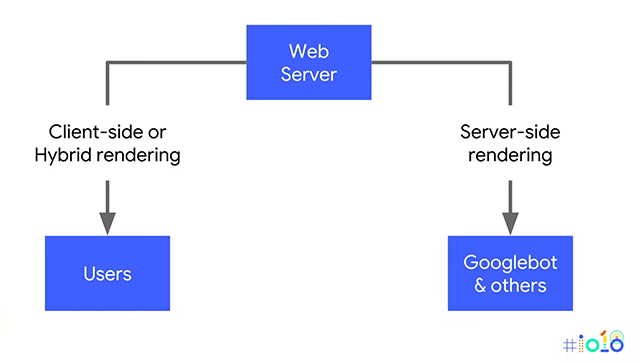

Websites built with Angular, React, Vue, and other JavaScript frameworks use client-side rendering (CSR) by default. The issue is that the initial HTML contains little or no content, so a crawler that does not execute the JavaScript sees only a blank page. Check out an example below from Google’s I/O.

One solution is the more traditional choice, server-side rendering (SSR). However, with SSR you could lose user experience advantages that only CSR provides. An alternative is prerendering, which checks the user agent of every page request and serves crawlers a pre-rendered, static version of the page.

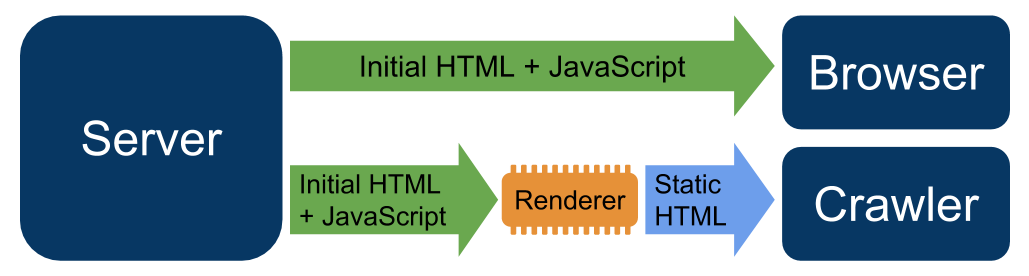

When you understand how client-side rendering works, it becomes easier to see why SEO problems happen. Dynamic rendering is a viable alternative to server-side rendering: users receive the website with JavaScript content generated in the browser, while Googlebot receives a static version. Here’s how dynamic rendering works.

Image Source: developers.google.com
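The user-agent check at the heart of prerendering and dynamic rendering can be sketched as follows. This is a minimal illustration under stated assumptions: the pattern list is hypothetical and far from exhaustive, and the names `isCrawler` and `chooseVariant` are invented for this example rather than taken from any library.

```javascript
// Hypothetical, non-exhaustive list of crawler user-agent patterns.
const BOT_PATTERNS = [/googlebot/i, /bingbot/i, /duckduckbot/i, /baiduspider/i, /yandexbot/i];

// Returns true when the User-Agent header looks like a known crawler.
function isCrawler(userAgent) {
  if (typeof userAgent !== 'string') return false;
  return BOT_PATTERNS.some((pattern) => pattern.test(userAgent));
}

// Decides which version of the page to serve for a request:
// crawlers get static HTML, everyone else gets the client-side app.
function chooseVariant(userAgent) {
  return isCrawler(userAgent) ? 'prerendered-html' : 'client-side-app';
}

const googlebotUa = 'Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)';
console.log(chooseVariant(googlebotUa));
```

On a real server, the user agent would come from the request’s `User-Agent` header, and the static variant would be produced by a rendering service. Both variants should carry the same content, so that crawlers and users see the same page.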

Content duplication

With JavaScript, several URLs may serve the same content, which can cause duplicate content problems. The duplication can be caused by capitalization, IDs, parameters with IDs, and so on. Duplicate content may be eliminated based on the downloaded HTML before the page is sent to rendering.
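One way to reduce URL-level duplicates is to normalize every URL before it is used in internal links or a canonical tag. The sketch below is an assumption-laden illustration: the ignored parameter names are hypothetical, and lowercasing the path is only safe if the server treats paths case-insensitively. It uses the standard `URL` API available in browsers and Node.js.

```javascript
// Hypothetical parameters that do not change page content (assumption).
const IGNORED_PARAMS = ['sessionid', 'sid', 'utm_source', 'utm_medium', 'utm_campaign'];

// Maps URL variants of the same page to a single canonical form.
function canonicalizeUrl(rawUrl) {
  const url = new URL(rawUrl);

  // Assumes the server treats /Shoes and /shoes as the same page.
  url.pathname = url.pathname.toLowerCase();

  // Copy the keys first so deleting does not disturb the iteration.
  for (const name of [...url.searchParams.keys()]) {
    if (IGNORED_PARAMS.includes(name.toLowerCase())) {
      url.searchParams.delete(name);
    }
  }

  // A stable parameter order makes ?a=1&b=2 and ?b=2&a=1 identical.
  url.searchParams.sort();

  // Fragments are not sent to the server, so drop them.
  url.hash = '';

  return url.toString();
}

console.log(canonicalizeUrl('https://example.com/Shoes/Red?sessionId=abc123&color=red'));
```

The result could then be emitted in a `<link rel="canonical">` element so that every variant points at the same preferred URL.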

With app shell models, the HTML response contains very little content and code. Every page on the website could display the same code, and that could even be the same code shown on several websites.

In some instances, this could cause pages to be treated as duplicates and not sent to rendering right away. Worse, the wrong page or even the wrong website could appear in the search results. This will eventually resolve on its own but could cause issues, particularly with newer websites.

If you’re using the old AJAX crawling scheme, keep in mind that it is deprecated and no longer supported.

JavaScript SEO Best Practices

These best practices help you make the most of JavaScript SEO. Let’s check them out.

Server-Side Rendering

Rendering is the process of retrieving a web page, running its code, and assessing the structure and design of the page. It can happen in several ways: server-side, client-side, and dynamic. Server-side rendering (SSR) is the process of rendering web pages on your own servers.

Example code of a website using SSR:

<!DOCTYPE html>
<html>
<head>
  <meta charset="utf-8">
  <title>Example of Server Side Rendering Website</title>
</head>
<body>
  <h1>Demo Website</h1>
  <p>This is an example of a new SSR website</p>
  <p>This is just a demo website.html</p>
</body>
</html>

In CSR, rendering is done by the user’s browser. Dynamic rendering, on the other hand, occurs through a third-party server. The most significant benefit of server-side rendering is how quickly pages can be rendered. Server-side rendering also ensures that all the page elements are rendered.
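To make the server-side step concrete, here is a minimal sketch of how a server might assemble the complete HTML before sending it. It is an illustration only, not how any particular framework implements SSR; the names `escapeHtml` and `renderPage` and the shape of the page data are hypothetical.

```javascript
// Escapes characters that have special meaning in HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Builds the full HTML document on the server, so the response
// already contains the content without any client-side JavaScript.
function renderPage({ title, heading, paragraphs }) {
  const body = paragraphs.map((p) => `<p>${escapeHtml(p)}</p>`).join('\n');
  return `<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<title>${escapeHtml(title)}</title>
</head>
<body>
<h1>${escapeHtml(heading)}</h1>
${body}
</body>
</html>`;
}

const html = renderPage({
  title: 'Example of Server Side Rendering Website',
  heading: 'Demo Website',
  paragraphs: ['This is an example of a new SSR website'],
});
console.log(html);
```

In a real application, the returned string would be written to the HTTP response, for example from a Node.js request handler, so that crawlers receive the finished markup in the first response.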

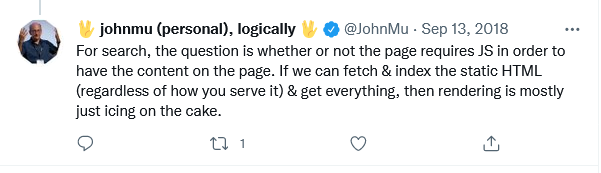

JavaScript Content Indexing

Getting Google to index JavaScript content is one of the more common technical issues SEO professionals face. JavaScript use across the web is growing fast, and many websites struggle to drive organic growth. Look at what Google’s John Mueller is saying about JS-injected websites.

When working on websites developed with JavaScript frameworks, you will inevitably face issues different from those encountered with WordPress and other popular content management systems. To succeed in search engines, you should know precisely how to check whether the website’s pages can be rendered and indexed, identify problems, and make the pages search engine friendly. You can use tools like Screaming Frog, and you can also check a page’s index status in Google Search Console.
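As a rough first check before reaching for those tools, you can test whether key content is already present in the raw HTML response, that is, before any JavaScript runs. The sketch below is a simplified assumption-based diagnostic, not a replacement for a real rendering test; the function name `findMissingPhrases` and the sample markup are hypothetical, and the tag stripping is deliberately naive.

```javascript
// Returns the expected phrases that are NOT visible in the raw HTML,
// i.e. content that only appears after JavaScript has run.
function findMissingPhrases(rawHtml, expectedPhrases) {
  const visibleText = rawHtml
    .replace(/<script[\s\S]*?<\/script>/gi, ' ') // ignore inline scripts
    .replace(/<[^>]+>/g, ' ')                    // naive tag stripping
    .replace(/\s+/g, ' ')
    .toLowerCase();

  return expectedPhrases.filter(
    (phrase) => !visibleText.includes(phrase.toLowerCase())
  );
}

// Hypothetical app shell: the content exists only inside a script.
const appShell =
  '<html><body><div id="root"></div>' +
  '<script>render("Red running shoes")</script></body></html>';

// Hypothetical server-rendered page: the content is in the markup.
const serverRendered =
  '<html><body><h1>Red running shoes</h1></body></html>';

console.log(findMissingPhrases(appShell, ['Red running shoes']));
console.log(findMissingPhrases(serverRendered, ['Red running shoes']));
```

Phrases reported as missing are not necessarily unindexable, since Google can render JavaScript, but they do depend on rendering succeeding and are worth verifying in Google Search Console.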

404 Errors in Single-Page Apps

When it comes to single-page applications, avoid soft 404 errors, where a missing page still returns a success status. Instead, when an HTTP request reveals that the content does not exist, consider redirecting to a not-found URL for which the server responds with a 404 status code. This way, Google will know that it’s a 404 page.

Example of a proper redirection with this approach:

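// Request the item; if it does not exist, send the user to the not-found page.
fetch(`/api/items/${itemId}`)
  .then(response => response.json())
  .then(item => {
    if (item.exists) {
      showItem(item);
    } else {
      // Assumes the server answers /not-found with a 404 status code.
      window.location.href = '/not-found';
    }
  });

HTTP Status Codes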

Use meaningful HTTP status codes, since Google uses them to find out whether something went wrong when crawling a page. For example, return 404 for a page that could not be found to tell Googlebot that the page should not be crawled or indexed. Status codes can also tell Googlebot that a page has moved to a new URL so that the index can be updated accordingly.
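The idea can be sketched as a small routing decision on the server. This is a minimal illustration under stated assumptions: the route tables and the function name `resolveResponse` are hypothetical, and 301 is used here as the status for a permanently moved page.

```javascript
// Hypothetical route tables for illustration only.
const PAGES = new Set(['/', '/products', '/contact']);
const MOVED = new Map([['/old-products', '/products']]);

// Picks a meaningful status code for the requested path.
function resolveResponse(path) {
  if (MOVED.has(path)) {
    // Permanent redirect: tells crawlers to update the indexed URL.
    return { status: 301, location: MOVED.get(path) };
  }
  if (PAGES.has(path)) {
    return { status: 200 };
  }
  // Unknown page: a real 404 instead of a soft 404.
  return { status: 404 };
}

console.log(resolveResponse('/old-products'));
console.log(resolveResponse('/missing'));
```

A server would then send the returned status, plus a `Location` header for redirects, along with the response body.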

Conclusion

To ensure the success of websites, particularly business ones, making sure that the audience can access and read the content is paramount. Search engines favor pages that are easy to access, and there is plenty of technology available to make a website look terrific.

However, web visibility will drop sharply if search engines cannot access that content. SEO therefore has to work within, and adapt to, these technical limitations to boost traffic, visibility, and ultimately business profitability.

A TIP FROM THE EDITOR: For more on SEO, look at our SEO Tips For Next.Js Sites article.